When we talk about cybersecurity, we tend to focus on the interests of businesses, governments, or other organizations. But what about our personal lives? Are we at risk from cyber attacks? We definitely are, and we don’t talk enough about it. When people ask cybersecurity experts what they should do to protect themselves, the answer is often “it depends on your threat model”. This is not false, but it is not very helpful either. Most people don’t relate to terminology such as threat models, and they have likely never given it much thought. This article is meant for professionals who need to discuss security with individuals. It aims to provide a realistic starting point for the risks we face as individuals, rather than as companies.

A threat model is simply a description of the attacks you should expect to be a target of. A good threat model gives you an understanding of:

- Who is the attacker (at least a category)?

- What is the motivation of the attacker?

- What will the attacker likely do?
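The three questions above map naturally onto a small record type. The following is a minimal sketch, assuming Python 3.9 or later; the class name, field names, and the example values are illustrative choices and not an established format for threat models.

```python
from dataclasses import dataclass, field


@dataclass
class ThreatModelEntry:
    """One entry of a personal threat model (field names are illustrative)."""
    attacker: str      # who: at least a category of attacker
    motivation: str    # why they would target you
    likely_actions: list[str] = field(default_factory=list)  # what they will likely do


def summarize(entry: ThreatModelEntry) -> str:
    """Render an entry as one readable sentence for a conversation."""
    actions = "; ".join(entry.likely_actions) or "no specific actions listed"
    return f"{entry.attacker} (motivation: {entry.motivation}) may: {actions}"


# Hypothetical example entry.
example = ThreatModelEntry(
    attacker="Criminals on the Internet",
    motivation="money",
    likely_actions=["phishing for banking credentials", "credit card theft"],
)
print(summarize(example))
```

Writing entries down this way makes it easier to check that each of the three questions has actually been answered for every attacker category.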

Let’s summarize some typical attacks in a table, and then discuss how we can better protect ourselves, or help friends and family protect their digital lives. This is not intended for people who are being targeted by intelligence agencies or professional spies: it is a description of threats that can hit anyone with a digital life.

| Attack | Friends, relatives and service persons | Criminals on the Internet |
|---|---|---|
| Identity theft | Theft of banking ID used to steal money. Signing agreements on behalf of the victim (credit/loans). | User account takeover, and banking ID theft if possible. |
| Spyware on phone or computer | Jealous partners wanting to track your activities, including physical location. | Criminals wanting to steal banking credentials or credit card data. |
| Data theft | Theft of photos, or they may also take the photos and abuse them later for extortion. | Exfiltration of personal data, especially photos. Primary motivation is extortion. |
| Credit card fraud | Use of credit card data stored in the web browser. | Phishing with the aim of stealing credit card information. Hacked web pages, where credit card data is stolen. |
| Cyber extortion | Threats to release private pictures, or to send them to friends and relatives. Less common among people who know each other from before. | Direct threats, typically related to porn habits they claim to have evidence of (in 99% of the cases these are empty threats). Threats about sending stolen pictures to relatives or releasing them on the Internet (more realistic threats). Threats to reveal compromising information to employer or spouse, for blackmail. |
| Malware | Mostly spyware, but remote access tools that allow remote control of a computer can also be used by jealous partners. | Ransomware can still hit individuals, but they are less attractive targets for criminals. Malware can be used as a stepping stone to attack corporate networks. |
| Network attack | Not relevant | Criminals attacking vulnerable routers exposed to the Internet, making them part of a global botnet. |

Identity theft

Delegate banking access when needed, don’t share login details, use DNS filtering, install security products with browser protection on phone and computer. Use multifactor authentication everywhere.

Identity theft is a big problem, and it costs individuals large sums of money every year. The elderly are particularly vulnerable to dishonest relatives and service persons, but this can also happen to younger people. In such cases, the attacker will typically:

- Attempt to steal one-time code dongles, still used by many banks. They may also just use them when not seen by the owner, to avoid causing suspicion.

- Use a phone app on a borrowed phone to confirm transactions.

- Ask for the password to such services with the excuse of being helpful. They may also be given the password to perform online banking on behalf of an elderly person.

The typical result of this type of attack is that the attacker transfers money from the victim’s account to their own account. They may also take out a loan, and then transfer the money. Often the loss will not be covered by insurance, because giving access to passwords and access codes is seen as negligence by the victim.

The obvious defense here is to not give out passwords or allow other people to control your bank account. For elderly people who need help, access can be delegated by the bank, allowing the helper to use their own identity to perform this work. That is a strong deterrent if the helper is the criminal, as it would be traceable who is performing the transactions. That would also remove the negligence argument from the insurance company, increasing the chance of getting the money back.

For criminals on the Internet, account takeover most often starts with a phishing attack. The target these days is typically banking details. Common-sense cyber hygiene can help, but we all know that people are not always that vigilant. Because of this, it is a good idea to use security products and services to block phishing links. This is not 100% effective protection, but it will remove many threats. If your ISP offers a DNS-based filtering service that uses threat intelligence to block threats, turn it on. Alternatively, you may want to set up a similar local service if you don’t trust the ISP. In addition, installing a security product with “safe browsing” features will help block known phishing links. This defense should also be considered for smartphones, as most people surf the Internet more from their phones than from their computers when at home.
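To make the idea of DNS-based filtering concrete, here is a minimal sketch of the core decision such a filter makes: is the requested hostname, or one of its parent domains, on a blocklist? The function name and the blocklist entries are hypothetical; a real filtering service builds its list from threat intelligence feeds and answers actual DNS queries, which this sketch does not do.

```python
def is_blocked(hostname: str, blocklist: set[str]) -> bool:
    """Return True if hostname or any of its parent domains is on the blocklist."""
    labels = hostname.lower().rstrip(".").split(".")
    # Check "a.b.example.com", then "b.example.com", then "example.com", and so on.
    for i in range(len(labels)):
        if ".".join(labels[i:]) in blocklist:
            return True
    return False


# Hypothetical blocklist entries, using the reserved ".example" domain.
BLOCKLIST = {"phishing-bank.example", "malware-downloads.example"}

print(is_blocked("login.phishing-bank.example", BLOCKLIST))  # True
print(is_blocked("www.mybank.example", BLOCKLIST))           # False
```

Matching on whole labels rather than substrings means that a subdomain of a blocked domain is caught, while an unrelated domain that merely contains the same text is not.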

Spyware on phone or computer

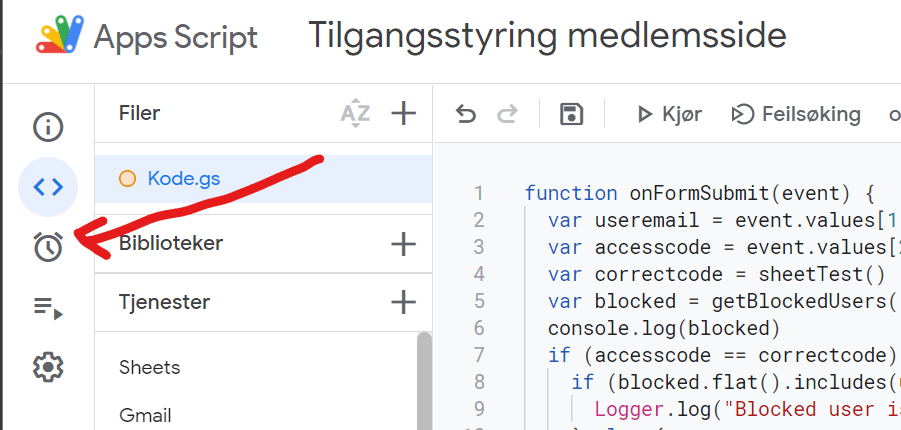

Install security software on your phone to detect spyware. Keep the phone up to date. Avoid installing software from unofficial sources. Check if apps are requesting unreasonable permissions or if they tend to drain your battery.

Spyware is often used by jealous and abusive partners. If you are in a relationship like this, the best course of action is obviously to leave. But even if you do, you would not want your ex to have control over your phone and computer. There are three ways an abusive partner or ex can track you:

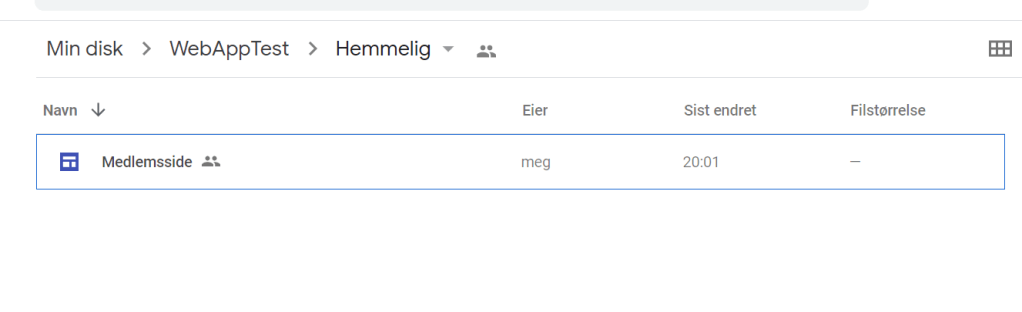

- Joint user accounts that allow tracking the other person. This can be smartphone apps linked to your car that allow the other person to track your position, shared access to cloud file storage such as OneDrive or Dropbox, or shared calendars. It can also be family sharing features on iPhone and Android phones that allow location tracking.

- Directly shared passwords. Many partners share their passwords and PIN codes because they trust each other and it is convenient. Others share such details due to social control and negative pressure. In a conflict situation this is dangerous, and it is important to end the sharing as soon as it is safe to do so.

- Actual spyware being installed, often called stalkerware, that allows the attacker to read text messages, track location, etc.

The first two bullet points are the most difficult. We never want to believe that our closest family and partners would abuse the trust given to them, but the fact is they often do. The best defense here is to be very selective with what is shared, and wherever possible to use sharing features that can be turned off instead of sharing a user account.

For the spyware case, security software can be effective in detecting and removing the spyware. In addition, such spyware tends to drain the battery fast because it is always active in the background. Check for apps with high battery usage. Spyware will often masquerade as legitimate apps. If you have apps with an unreasonable number of permissions, this is also a good reason to take a closer look at them, and to remove them if you do not know why they need those permissions.

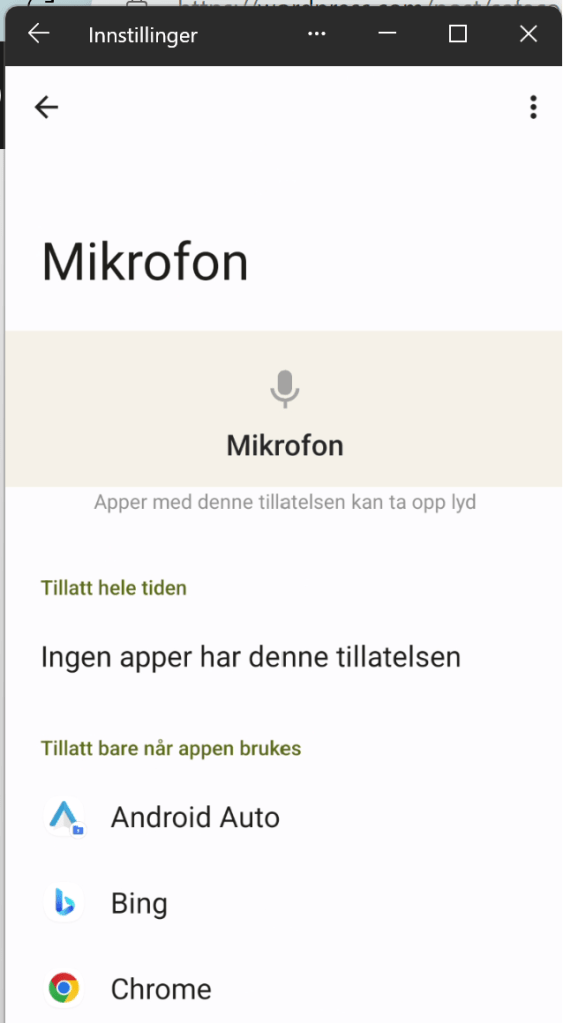

It is therefore a good idea to regularly go through app permissions to make sure you have not granted apps unreasonable access. The permissions that can be granted to apps on smartphones can be quite granular. Spyware will typically want to have access to your calendar, contacts, location, microphone, photos and videos, phone log, and your text messages. If an app asks for any of that without any real reason to do so, be careful.
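A permission review like this can be sketched as a simple comparison between what an app has been granted and what you consider justified. The example below is only an illustration: the permission names are labels chosen here, not identifiers from Android or iOS, and the app inventory is assumed to be typed in by hand from the phone’s settings screen.

```python
# Permission categories listed above as typical spyware targets (labels are illustrative).
SENSITIVE = {
    "calendar", "contacts", "location", "microphone",
    "photos_and_videos", "phone_log", "text_messages",
}


def flag_suspicious_apps(installed, expected):
    """Return {app: sorted sensitive permissions with no known justification}.

    installed: app name -> set of granted permissions
    expected:  app name -> set of permissions you consider justified
    """
    findings = {}
    for app, granted in installed.items():
        unexplained = (granted & SENSITIVE) - expected.get(app, set())
        if unexplained:
            findings[app] = sorted(unexplained)
    return findings


# Hypothetical inventory of two apps.
installed = {
    "Maps": {"location"},
    "Flashlight": {"location", "microphone", "text_messages"},
}
expected = {"Maps": {"location"}}

print(flag_suspicious_apps(installed, expected))
# {'Flashlight': ['location', 'microphone', 'text_messages']}
```

An app that shows up in the result is not necessarily spyware, but it is a candidate for a closer look.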

The last piece of defense here would be to keep your phone up-to-date. Not only does this help avoid exploitation of vulnerable software, it will also make sure you have the latest built-in security features your phone’s operating system has to offer.

Data theft

Use multifactor authentication on all accounts used to share or store sensitive data. Also, store the most sensitive files in extra secure locations. Cloud storage providers may have vault functions with extra security for sensitive data.

For companies, data theft is either about intellectual property, or about details the company doesn’t want to be public, which can be abused in extortion schemes. For individuals, it is mostly about extortion, and very often private photos. To reduce the risk of theft of your most personal files, it is a good idea to take some extra steps to protect them.

If you use cloud accounts to save your files, several providers offer a vault with extra protection for your most sensitive data. For example, OneDrive offers a Personal Vault, which enforces MFA, has extra restrictions on sharing, and avoids saving local unprotected copies on disk when you access the files. Dropbox also has a Vault with similar features.

Many users who have had personal files stolen have experienced this through Snapchat or other social media accounts. Such accounts should be secured with multi-factor authentication. If you share very personal photos or files through social media accounts, it is also good to use time-expiring links, and to prefer secure messaging services if possible. Signal is a good solution.

Credit card fraud

Use credit cards instead of debit cards online. Review the transaction list before paying the bill. Always store credit card data in secure locations.

Credit card fraud is common, both from relatives and service persons, and from criminals on the Internet. People with local access to your cards can steal the physical card, or use card data stored on your computer. Make sure to only store card data in secure locations, such as a password manager, or a vault that requires strong authentication to access. Storing credit card data in text files or spreadsheets is very risky.

It can be a good idea to use credit cards when paying for things online. This way, your bank account cannot be directly drained by criminals, and you can report the fraudulent transactions to your bank quickly. Make it a habit to review all transactions before paying the bill, and contact your bank immediately if you see unknown transactions. Note that many criminals will use a series of smaller transactions instead of one big one, to avoid easy discovery or raising red flags in automated fraud detection systems.
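The pattern of many small charges can be checked for mechanically when reviewing a statement. The sketch below groups small transactions by merchant and reports merchants with several of them in one billing period. The thresholds and the merchant names are arbitrary examples, and the statement is assumed to be available as a list of (merchant, amount) pairs, for instance from a bank export.

```python
from collections import defaultdict


def flag_small_charge_series(transactions, small_limit=20.0, min_count=3):
    """Return {merchant: total} for merchants with several small charges.

    transactions: iterable of (merchant, amount) tuples for one billing period.
    small_limit and min_count are arbitrary example thresholds.
    """
    small = defaultdict(list)
    for merchant, amount in transactions:
        if 0 < amount <= small_limit:
            small[merchant].append(amount)
    return {
        merchant: round(sum(amounts), 2)
        for merchant, amounts in small.items()
        if len(amounts) >= min_count
    }


# Hypothetical statement for one billing period.
statement = [
    ("Grocery Store", 54.10),
    ("UNKNOWN-SHOP", 9.50),
    ("UNKNOWN-SHOP", 9.50),
    ("Streaming Service", 12.00),
    ("UNKNOWN-SHOP", 9.50),
]
print(flag_small_charge_series(statement))  # {'UNKNOWN-SHOP': 28.5}
```

A flagged merchant may well be legitimate, such as a coffee shop you visit often, so the output is a list of things to look at rather than proof of fraud.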

Cyber extortion

Avoid paying criminals as far as possible. Report blackmail attempts to the police. Be vigilant with security of your own files, and be careful with what kind of photos you let other people take of you.

Both criminals and people close to you may use real or fake data to try to blackmail you. A common type of online fraud here is porn-related extortion. A phishing e-mail is sent to you, claiming that the sender has video of you enjoying some adult content, which they will release to the public or to your friends if you do not pay them money. This threat is scary enough that many people will pay up, even if they know very well that there is no way for the criminals to have such videos of them. Knowing that this is a common scare tactic and fraud can help people ignore such threats without unnecessary anxiety.

Another type of extortion is based on photos. The risk of getting photos stolen is lower if you have taken precautions, but there is no way to be completely secure. Other people may also have taken pictures, or even generated them using AI tools or photo editing. In this case, you might experience that the photos are actually published or shared. If this happens, having a plan to cope with it is good. It should also be reported to the police.

Any blackmail attempts should be reported to the police.

Malware

Be careful with messages and links, keep your devices up to date, and use antivirus software.

Malware is any kind of software created for malicious purposes, such as data theft, remote controlling a computer, or using your computer as a bot to attack others. Your computer in this case can be any of your Internet-connected devices, such as your PC, your Wi-Fi router, your smartphone, your connected EV, or even your washing machine.

Most malware is spread through e-mail and social media chats. Being careful with messages is a good starting point. Further, keeping computers and equipment up to date, and running antivirus software where possible is a good way to protect oneself from malware.

Network attack

Remember to update your network devices, and shield them from direct Internet exposure as far as possible.

Criminals on the Internet will run automated attacks on routers. Avoid exposing management ports to the Internet to reduce the risk of this. When a vulnerability that can be exploited automatically becomes known, network devices are commonly targeted in mass exploitation attacks that quickly compromise a large number of devices. The compromised devices can then be used to attack other companies, or your own workplace. To avoid this, make sure the network devices at home are patched as soon as possible when updates are published.
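One way to check whether management ports are exposed is to try connecting to them from outside your home network. The following is a minimal sketch using Python’s standard `socket` module. The port list is an assumption about commonly used management ports and is not exhaustive, and the function should only be pointed at devices you own.

```python
import socket


def reachable_ports(host, ports, timeout=2.0):
    """Return the subset of TCP ports on host that accept a connection."""
    found = []
    for port in ports:
        try:
            with socket.create_connection((host, port), timeout=timeout):
                found.append(port)
        except OSError:
            pass  # closed, filtered, or timed out
    return found


# Assumed list of commonly used management ports (not exhaustive):
# 22 SSH, 23 Telnet, 80 HTTP, 443 HTTPS, 8080 alternative HTTP.
MANAGEMENT_PORTS = [22, 23, 80, 443, 8080]
```

Calling `reachable_ports(your_public_ip, MANAGEMENT_PORTS)` from a connection outside your home, such as a mobile hotspot, should ideally return an empty list. Here `your_public_ip` is a placeholder for the address your ISP has assigned to your router.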

You can still be hacked

If you consider your threat model, and you make reasonable attempts to be more secure as discussed above, you considerably reduce your risk from cyber attacks, whether they are family member insider threats or bad guys on the Internet. Doing so will, however, not make you invulnerable. You can still get hacked. Because of this, it is also a good idea to have a personal incident response plan. We won’t dive into a detailed story on that, but we should all consider the following:

- What should I have offline backups of, for example on a USB drive, in case all online data is lost/destroyed?

- Who do I need to call if my accounts are compromised? Make a list of your most important contact points for banks, people you frequently interact with, your insurance company, and perhaps others that can help or will need to know.

- Keep some offline emergency resources, such as cash, a notebook with contact information, and perhaps a dumb phone with an extra SIM card

Having all of this in place is the digital equivalent of having smoke detectors and fire extinguishers in your home. It can make a very bad day somewhat less bad. And that has a lot of value in the moment.