When we first started connecting OT systems to the cloud, it was typically to get access to data for analytics. That is still the primary use case, with most vendors offering some SaaS integration to help with analytics and planning. The cloud side is now more flexible than before, with more integrations, more capabilities and more AI. Some services are even starting to push commands from the cloud back into the OT world, something we will only see more of in the future. The downside, seen from the asset owner’s point of view, is that the critical OT system, with its legacy security model and old components, is now connected to a hyperfluid black box making decisions for the physical world on the factory floor. There are a lot of benefits to be had, but also a lot of things that could go wrong.

How can OT practitioners learn to love the cloud? Let’s consider three key questions to ask when we assess the SaaS world from an OT perspective!

The first thing we have to do is accept that we’re not going to know everything. The second thing we have to do is ask ourselves, ‘What is it we need to know to make a decision?’… Let’s figure out what that is, and go get it.

Leo McGarry – character in “The West Wing”

The reason we connect our industrial control systems to the cloud is that we want to optimize. We want to stream data into flexible compute resources, where skilled analysts can use it to make better decisions. We are slowly moving towards allowing the cloud to make decisions that feed back into the OT system, making changes in the real world. From the C-suite, doing this is a no-brainer. How these decisions challenge the technology and the people working on the factory floor can be hard to see from the bird’s-eye view, where the discussion is about competitive advantage and efficiency gains instead of lube oil pressure or supporting a control panel still running on Windows XP.

Question 1: How can I keep track of changes in the cloud service?

Several OT practitioners have mentioned an unfamiliar challenge: the SaaS in the cloud changes without the knowledge of the OT engineers. They are used to strict management of change procedures, while the cloud is managed as a modern IT project with changes happening continuously. This is like putting Parmenides up against Heraclitus; we will need dialog to make this work.

Trying to convince the vendor to move away from modern software development practices with CI/CD pipelines and frequent changes, towards a more formal process with requirements, risk assessment spreadsheets and change acceptance boards, is not likely to be a successful approach, although for many engineers it may seem the most natural response to a new “black box” in the OT network. At the same time, expecting OT practitioners to embrace a “move fast and break things, then fix them” mindset is also, fortunately, not going to work. A middle ground is needed, built on the following principles:

- SaaS vendors should be transparent with OT customers about which services are used, how they are secured, and how they can affect the OT network. This overview should preferably be available to the asset owner dynamically, and not as a static report.

- Asset owners should remain in control of which features are used.

- A sufficient level of observability should be provided across the OT/cloud interface, to allow a joint situational understanding of the attack surface, cyber risk and incident management.
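As an illustration of what a dynamic service overview could enable, here is a minimal sketch that compares two snapshots of a vendor's service inventory and highlights what changed. The `ServiceEntry` fields, the snapshot format and all service names except “Liftalytics” are assumptions made for this example; no real vendor API is implied.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceEntry:
    """One cloud service in a hypothetical vendor inventory export."""
    name: str
    version: str
    can_write_to_ot: bool  # True if the service can push data or commands into OT


def diff_inventory(old: list[ServiceEntry], new: list[ServiceEntry]) -> dict[str, list[str]]:
    """Compare two inventory snapshots and group the changes by type."""
    old_by_name = {s.name: s for s in old}
    new_by_name = {s.name: s for s in new}
    changes: dict[str, list[str]] = {
        "added": [], "removed": [], "changed": [], "new_ot_write_path": [],
    }
    for name in sorted(new_by_name.keys() - old_by_name.keys()):
        changes["added"].append(name)
        if new_by_name[name].can_write_to_ot:
            changes["new_ot_write_path"].append(name)
    for name in sorted(old_by_name.keys() - new_by_name.keys()):
        changes["removed"].append(name)
    for name in sorted(old_by_name.keys() & new_by_name.keys()):
        before, after = old_by_name[name], new_by_name[name]
        if before != after:
            changes["changed"].append(name)
        # A service that gains write access to OT deserves special attention.
        if after.can_write_to_ot and not before.can_write_to_ot:
            changes["new_ot_write_path"].append(name)
    return changes


# Hypothetical snapshots taken on two consecutive days.
yesterday = [
    ServiceEntry("liftalytics-web", "2.3.0", False),
    ServiceEntry("telemetry-ingest", "1.8.1", False),
]
today = [
    ServiceEntry("liftalytics-web", "2.4.0", True),
    ServiceEntry("telemetry-ingest", "1.8.1", False),
    ServiceEntry("ai-assistant", "0.1.0", False),
]
changes = diff_inventory(yesterday, today)
```

The `new_ot_write_path` group is the one an OT engineer would likely care most about, since it marks services that have gained the ability to affect the physical process.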

Question 2: Is the security posture of the cloud environment aligned with my OT security needs?

A key worry among asset owners is the security of the cloud solution, which is understandable given the number of data breaches we read about in the news. Some newer OT/cloud integrations also challenge the traditional network-based security model, where all data exchange goes through a push/pull DMZ. Newer systems sometimes include direct streaming to the cloud over the Internet, point-to-point VPNs and other alternative data flows.

Say you have a crane operating in a factory, and this crane has been assigned a certain security level (SL2) with corresponding security requirements. The basis for this assessment has been that the crane is well protected by a DMZ and double firewalls. Now a planned upgrade of the crane will add a new remote access feature and direct cloud integration via a 5G gateway delivered by the vendor. This has many benefits, but it challenges the traditional security model. The gateway itself is certified and well hardened, but the new system allows traffic from the cloud into the crane network, including remote management of the crane controllers. On the surface, the security of the SaaS seems fine, but the OT engineer finds it hard to trust the vendor here.
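To make the clash with the DMZ model concrete, the sketch below lists a few data flows and flags those that reach the crane zone without passing through the DMZ. The zone names, the `Flow` structure and the policy in `ALLOWED_PEERS` are assumptions for illustration, not taken from any standard or product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """A data flow between two network zones (hypothetical model)."""
    source_zone: str
    dest_zone: str
    description: str


# Assumed policy: the crane zone may only exchange data with the DMZ.
ALLOWED_PEERS = {"crane": {"dmz"}}


def flows_bypassing_dmz(flows: list[Flow], protected_zone: str = "crane") -> list[Flow]:
    """Return flows that touch the protected zone from a zone outside the policy."""
    # Traffic that stays inside the protected zone is always allowed.
    allowed = ALLOWED_PEERS[protected_zone] | {protected_zone}
    bypassing = []
    for flow in flows:
        inbound = flow.dest_zone == protected_zone and flow.source_zone not in allowed
        outbound = flow.source_zone == protected_zone and flow.dest_zone not in allowed
        if inbound or outbound:
            bypassing.append(flow)
    return bypassing


flows = [
    Flow("crane", "dmz", "historian push"),
    Flow("dmz", "cloud", "data replication"),
    Flow("cloud", "crane", "remote management via 5G gateway"),
]
violations = flows_bypassing_dmz(flows)
```

Here only the remote management flow is flagged, which is exactly the flow the original SL2 assessment did not account for.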

One way the vendor can help create the necessary trust here is to allow the asset owner to see the overall security posture generated by automated tools, for example a CSPM (cloud security posture management) solution. This information can be hard for the customer to interpret, so a selection of data and explanations of the context will be needed. An AI agent can assist with this, for example by mapping the infrastructure and security posture metrics to the services in use by the customer.
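The selection step can be sketched without any AI at all: filter posture findings down to the services a given customer actually uses, and sort them by severity. The `Finding` fields, the severity scale and the resource names below are assumptions; real CSPM tools each have their own export formats.

```python
from dataclasses import dataclass

# Assumed severity scale, from least to most severe.
SEVERITY_ORDER = {"low": 0, "medium": 1, "high": 2, "critical": 3}


@dataclass(frozen=True)
class Finding:
    """One posture finding in a hypothetical CSPM export."""
    resource: str
    service: str   # the customer-facing service the resource belongs to
    severity: str  # one of the keys in SEVERITY_ORDER
    title: str


def findings_for_customer(
    findings: list[Finding],
    services_in_use: set[str],
    min_severity: str = "medium",
) -> list[Finding]:
    """Keep findings for services the customer uses, most severe first."""
    threshold = SEVERITY_ORDER[min_severity]
    relevant = [
        f for f in findings
        if f.service in services_in_use and SEVERITY_ORDER[f.severity] >= threshold
    ]
    return sorted(relevant, key=lambda f: SEVERITY_ORDER[f.severity], reverse=True)


all_findings = [
    Finding("vm-web-01", "liftalytics", "high", "Service account has broad role"),
    Finding("bucket-logs", "billing", "critical", "Public storage bucket"),
    Finding("db-telemetry", "liftalytics", "low", "Backup retention below target"),
    Finding("vpn-gw-02", "remote-access", "medium", "Outdated TLS configuration"),
]
shown = findings_for_customer(all_findings, {"liftalytics", "remote-access"})
```

The billing finding is dropped even though it is critical, because it does not belong to a service this customer uses. Whether that is the right call depends on how well the vendor isolates services from each other, which is the topic of the next question.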


Question 3: How can we change the OT security model to adapt to new cloud capabilities?

The OT security model has for a long time been built on network segmentation, but with very static resources and security needs. When we connect these assets into a cloud environment that is undergoing more rapid changes, it can challenge the local security needs in the OT network. Consider the following fictitious crane control system.

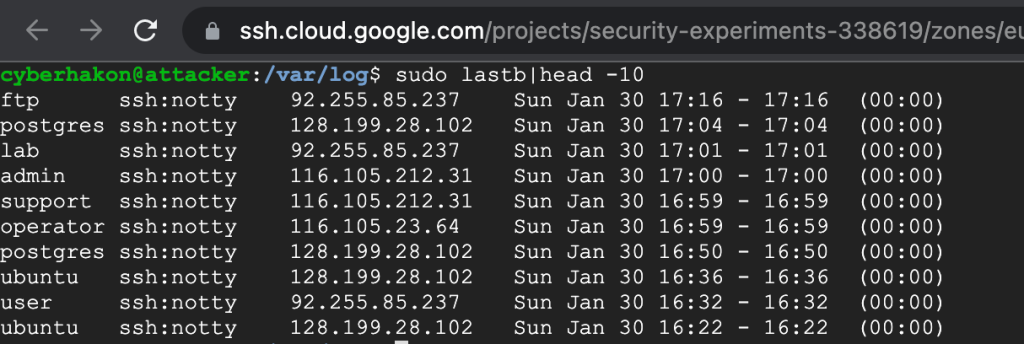

In the crane example, the items in the blue box are likely to be quite static. The applications in the cloud are likely to see more rapid change, such as more integrations, AI assistants, and so on. A question with a large impact on the attack surface exposure of the on-prem crane system is how well the components in the cloud are separated from each other. Imagine that the web application “Liftalytics” is running on a VM with a service account that has too many privileges. Then an attacker who exploits a vulnerability to get a shell on this web application VM may be able to move laterally to other cloud resources, even with network segregation in place. These types of security issues are generally invisible to the asset owner and OT practitioners.
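One way to make such hidden paths visible is to model which cloud resource can access which, and then search for a route from the exposed web application to the on-prem gateway. The following is a minimal sketch using breadth-first search. The resource names and access relations are invented for this example; only “Liftalytics” comes from the crane scenario.

```python
from collections import deque
from typing import Optional


def find_path(access: dict[str, list[str]], start: str, target: str) -> Optional[list[str]]:
    """Breadth-first search for a shortest access path from start to target."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            return path
        for neighbor in access.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(path + [neighbor])
    return None  # no lateral movement path exists in this model


# Hypothetical access graph: an edge A -> B means an identity on A can reach B.
well_scoped = {
    "liftalytics-vm": ["telemetry-db"],
    "remote-access-console": ["crane-gateway"],
}
# Same graph, but the web VM's service account is over-privileged.
over_privileged = {
    "liftalytics-vm": ["telemetry-db", "remote-access-console"],
    "remote-access-console": ["crane-gateway"],
}

safe = find_path(well_scoped, "liftalytics-vm", "crane-gateway")
exposed = find_path(over_privileged, "liftalytics-vm", "crane-gateway")
```

With the well-scoped service account there is no path, while the over-privileged one yields a route through the remote access console to the crane gateway. Network segregation does not show up in this graph at all, which is the point: the path is created by identity and permissions.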

If we start the cloud integration without any lateral movement path between the remote access system used by support engineers and the exposed web application, we may have an acceptable situation. But imagine now that a need appears that makes the vendor connect the web app and the remote access console, creating a lateral movement path in the cloud. This must be made visible to the OT owner, and then:

- The OT owner should have to explicitly accept the change for it to take effect

- If the change goes ahead, the resulting change in security posture and attack surface must be communicated, so that compensating measures can be taken in the on-prem environment

For example, if a new lateral movement path is created and this exposes the system to unacceptable risk, local changes can be made, such as disabling protocols at the server level, adding extra monitoring, etc.
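The acceptance rule can be expressed as a simple gate in the vendor's change process. This is only a sketch of the idea; the `CloudChange` structure and its field names are hypothetical, and a real implementation would hook into the vendor's deployment pipeline and the asset owner's management of change process.

```python
from dataclasses import dataclass, field


@dataclass
class CloudChange:
    """A proposed change in the cloud environment (hypothetical model)."""
    description: str
    new_paths_to_ot: list[str] = field(default_factory=list)
    accepted_by_asset_owner: bool = False


def may_take_effect(change: CloudChange) -> bool:
    """Changes that open new paths towards OT need explicit acceptance."""
    if not change.new_paths_to_ot:
        return True  # no new exposure of the OT network, no acceptance needed
    return change.accepted_by_asset_owner


def notification(change: CloudChange) -> str:
    """Build the message that tells the asset owner what will change."""
    paths = "; ".join(change.new_paths_to_ot) or "none"
    return f"Change: {change.description}. New paths towards OT: {paths}."


change = CloudChange(
    description="Connect web app to remote access console",
    new_paths_to_ot=["liftalytics-vm -> remote-access-console -> crane-gateway"],
)
blocked = not may_take_effect(change)
message = notification(change)
```

Until the asset owner sets `accepted_by_asset_owner`, the change stays blocked, and the notification gives the OT team what they need to plan compensating measures on-prem.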

The tool we have at our disposal to make better security architectures is threat modeling. By combining insights into the attack surface from automated cloud posture management tools with cloud security automation capabilities and the required changes in protection, detection and isolation capabilities on-prem, we can build a living, holistic security architecture that allows for change when needed.

Key points

Connecting OT systems to the cloud creates complexity, and sometimes it is hidden. We posed three questions to start the dialog between the OT engineers managing the typically static OT environment and the cloud engineers managing the more fluid cloud environments.

- How can I keep track of changes in the cloud environment? – The vendor must expose service inventory and security posture dynamically to the consumer.

- Is the security posture of the cloud environment aligned with my security level requirements? – The vendor must expose security posture dynamically, including providing the required context to see what the on-prem OT impact can be. AI can help.

- How can we change the OT security model to adapt to new cloud capabilities? – We can leverage data across on-prem and cloud combined with threat modeling to find holistic security architectures.


Doing cloud experiments and hosting this blog costs money – if you like it, a small contribution would be much appreciated: coff.ee/cyberdonkey